This product was purchased using personal funds. No agreement has been signed with the manufacturer, and no contact has been made. As such, this review is presented as-is using only rasterization-based rendering engines. The card’s main technology features aren’t usable in today’s games… yet. Because of that, this is going to be an ongoing review, a first for SFFn, and will see several updates over the following months.

The GeForce RTX 2080 Ti has been covered extensively on other sites as the launch of NVIDIA’s new Turing GPU architecture was hotly anticipated. Most of the unique reading material that we had planned to provide can already be found elsewhere, so I will briefly cover those unique topics in several bullet points throughout the review. The main intent of this review is to provide the community with precise measurements and performance metrics of the card for building purposes.

Now, before we get started, I would like to get some things out of the way:

- This GPU is insanely overpriced. It always costs a lot to be king and this GPU is no exception.

- This GPU is two architectures beyond Pascal but ultimately it’s just Volta Plus. “Volta is Pascal Plus, Pascal was Maxwell Plus, and so we don’t throw out the shader and start over, we are always refining. So it’s more accurate to say that Turing is Volta Plus… a bunch of stuff.” -Tom Petersen, Director of Technical Marketing at NVIDIA

- The technology known as Ray tracing, which NVIDIA used to market these GPUs, is nothing new. In fact, Ray tracing is old tech that has been used in rendering since 1968 and is only now starting to become viable for the real-time calculation of light and reflection.

- What is Ray tracing? Ray tracing is a way to generate photorealistic graphics by tracing the path of light beams, simulating the way they reflect, refract, and get absorbed by the surfaces of virtual objects. This is how light functions IRL, and emulating that in-game, in real-time, will be a game changer for the entire industry.

- Make no mistake, Ray tracing IS the Holy Grail for game developers. Jensen Huang’s cheesy RTX launch slogan, “it just works”, means that when developers eventually make the switch to Ray tracing, they won’t have to pre-bake the reflections, lighting, or shadows in every scene. This not only cuts down on dev time but also makes everything look far more realistic.

- The footage we’ve been shown thus far is not 100% Ray tracing. The engines are still rasterization-based, with just light and reflection being handled by the Turing architecture in real-time.

- NVIDIA, AMD, and Intel have all been working on the sidelines to bring Ray tracing to the gaming industry since the mid-2000s. It cannot come soon enough.

With that out of the way, here are the official product pages for the NVIDIA GeForce RTX 2080 Ti.

NVIDIA GeForce RTX 2080 Ti landing page

NVIDIA GeForce RTX 2080 Ti product page

Let’s get to it!

Specifications

| Chipset Manufacturer | NVIDIA |

| GPU series | NVIDIA GeForce GTX 20 Series |

| GPU | GeForce GTX 2080 Ti |

| Architecture | Turing TU102 (TU102-300A-K1-A1) |

| Brand | NVIDIA |

| Model | 900-1G150-2530-000 (PG150 SKU 32) |

| CUDA cores | 4352 |

| Video Memory | 11GB GDDR6 |

| Memory Bus | 616 GB/s, 352-bit |

| Engine Clock | Base: 1350 MHz Boost: 1635 MHz |

| Memory Clock | 14 GHz / 14,000MHz / 14Gbps |

| PCI Express | 3.0 |

| Display Outputs | 3 x DisplayPort 1.4 1 x HDMI 2.0b 1 x VirtualLink (next-gen VR, 30W passthrough) |

| HDCP Support | Yes (HDCP 2.2) |

| Multi Display Capability | Quad Display |

| 4K ready | Yes |

| VR ready | Yes |

| Recommended Power Supply | 650W |

| Power Consumption | 260W |

| Power Input | 8 pin + 8 pin |

| API Support | DirectX 12 / 12.1 OpenGL 4.6 OpenCL 2.0 Vulkan 1.1.82 |

| Cooling | Dual Fan (90mm) |

| SLI Support | Yes NVLink Bridge sold separately (x8 NVLink). |

| Card Length | 10.5 inches /267 mm – 268mm as measured |

| Card Width | Dual-Slot – 39.27mm as measured |

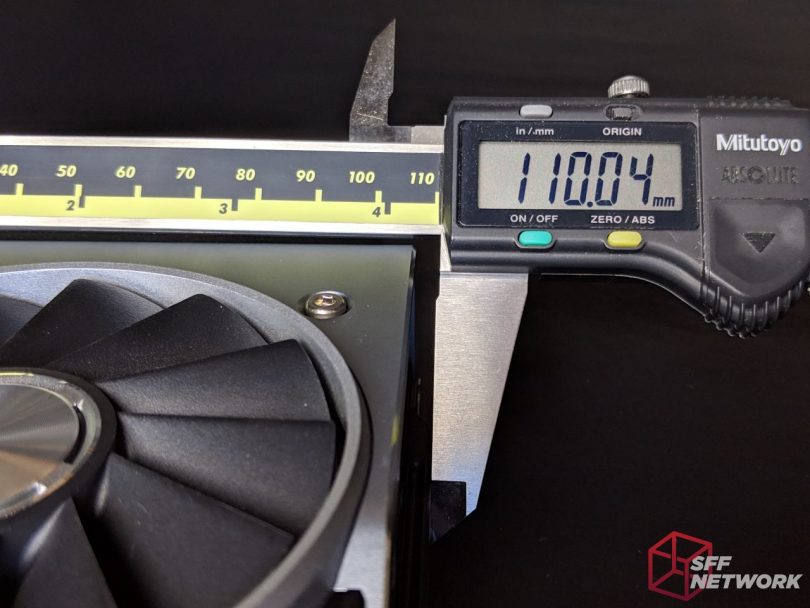

| Card Height | 4.556” / 115.7mm – 110.04mm as measured |

| Weight | 1270g (2.78LBS) |

| Accessories | DP to DVI adapter User Manuals |

| Warranty | 3 years |

| Price | $1,199 (MSRP) |
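The memory figures in the table are related to each other: peak bandwidth is the bus width multiplied by the per-pin data rate. A minimal sketch of that arithmetic, where the function name is my own and only the 352-bit and 14Gbps values come from the table:

```python
def memory_bandwidth_gb_s(bus_width_bits: int, data_rate_gbps: float) -> float:
    """Peak memory bandwidth in GB/s.

    bus width (bits) x per-pin data rate (Gbps), divided by 8 bits per byte.
    """
    return bus_width_bits * data_rate_gbps / 8


# Values from the specification table: 352-bit bus, 14 Gbps GDDR6.
print(memory_bandwidth_gb_s(352, 14))  # 616.0
```

That matches the 616 GB/s listed under Memory Bus.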

Packaging and Contents

Black and green. Nothing fancy.

The included bundle is quite lacking, especially for a card of this caliber. There is no promotional material, no coupon codes, no game codes, no case badge, no SLI bridge (now a $90 NVLink bridge), no poster, and no driver media.

While there have been several jokes about it, I personally find the GeForce RTX 2080 Ti appealing to look at, as the matte finishes of the materials complement each other. The body itself appears to be made out of extruded aluminum and, just like last time, green is the only color these LEDs will ever shine.

This black shield in the center of the card is the false cover that needs to be popped off should you ever wish to disassemble the GPU.

Credit goes to Steve Burke at Gamer’s Nexus for figuring that out (video).

Measurements

Top: EVGA GTX1080 Ti FTW3

Middle: EVGA GTX1080 Ti SC2

Bottom: NVIDIA RTX2080 Ti FE

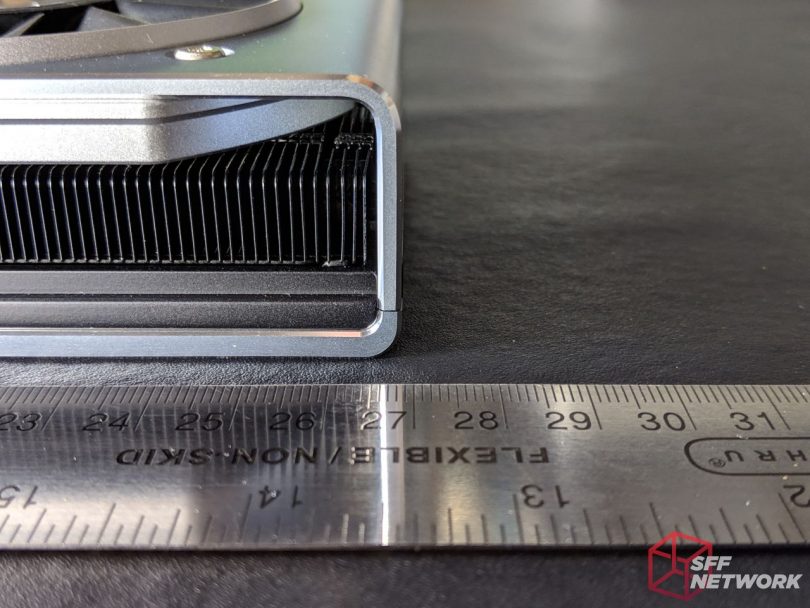

268mm in length. Measured from the inner side of the PCIe bracket.

39.27mm at its thickest point. The aluminum that surrounds the fans protrudes outwards.

The card is 110.04mm tall. Measured from the topmost point of the copper contacts on the PCIe connector to the very top of the NVLink bridge cover.

The 13-blade fans are 85mm wide. The fans are made by Asia Vital Components (AVC), and the fan is model DAPA0815B2UP001.

AVC fan model decode (thanks John!):

D – Axial Fan

A – General type

P – Partner/Proprietary??

A – Gen A

08 – Dimension – 8cm

15 – Thickness – 15mm

B – two ball bearing

2 – 12V

U – Extra High Speed rotation

P – PWM

001 – Revision??

This model may very well be proprietary as it was not available in their current fan catalogue. The fan uses a 3-phase 6-pole motor rated at 12V 0.60A. As decoded, they are double-ball bearing PWM fans and the sound signature is pleasant (like a Noctua). Try as I might, even hotboxing the card up to 89°C, the fans stayed relatively quiet compared to other fan profiles.

The GeForce RTX 2080 Ti is far smaller and slightly more powerful than the GTX 1080 Ti resting below it.

Test Setup

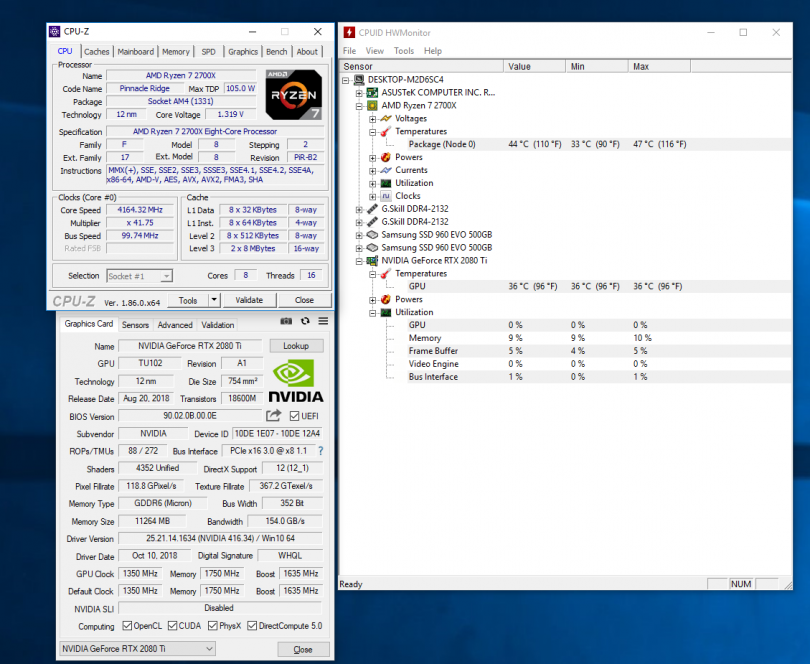

The test bench we have set up is a high end system.

This system was designed to help showcase the kind of computer this card’s target audience would have, without bottlenecking the GPU.

The overclock is a mild GPU clock overclock that every single GeForce RTX 2080 Ti should be capable of.

Overclocking the memory will yield better results but I’m not sure if all the 3rd party cards use the same GDDR6 memory and would get the same performance.

Also, in EVGA’s Precision X1 utility, the Voltage slider doesn’t work so we’re unable to really push the limits at this time.

Far better scores when overclocked can be obtained but it will vary from one card to another.

Test bench:

CPU: AMD Ryzen 2700x (8c/16t)

Motherboard: ASUS ROG STRIX X470-I GAMING

Memory: 32GB of DDR4 @ 3200MHz (C14, 2x16GB)

Storage: 2x Samsung 960 EVO 500GB

Cooling: Corsair H115i Platinum /Noctua NH-L12

Power Supply: SilverStone SX800-LTI

Chassis: Chimera MachOne Prototype

Monitor: Alienware AW3418DW 34″ WQHD(3440×1440) Curved(1900R) 4ms(GTG) 120Hz(G-Sync) IPS 21:9

Software Used:

Fresh Windows 10 install (ver. 1803)

NVIDIA GeForce Driver v416.34

CPU-Z 1.86.0

GPU-Z 2.11.0

EVGA Precision X1 0.3.3.0

HWMonitor 1.35

FRAPS 3.5.99

FRAFS 0.3.0.3

System Tweaks:

GeForce Experience Disabled.

G-Sync Disabled.

Win 10 “High Performance” power mode.

Win 10 Game Bar/DVR disabled.

“Turn on Fast Startup” disabled.

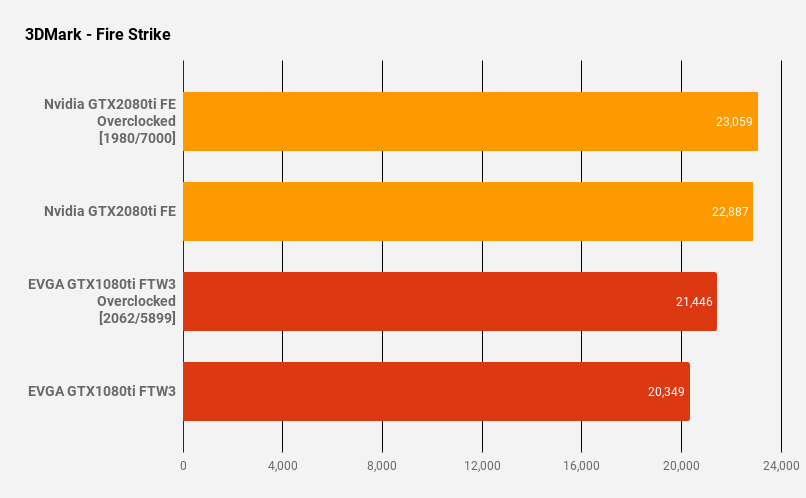

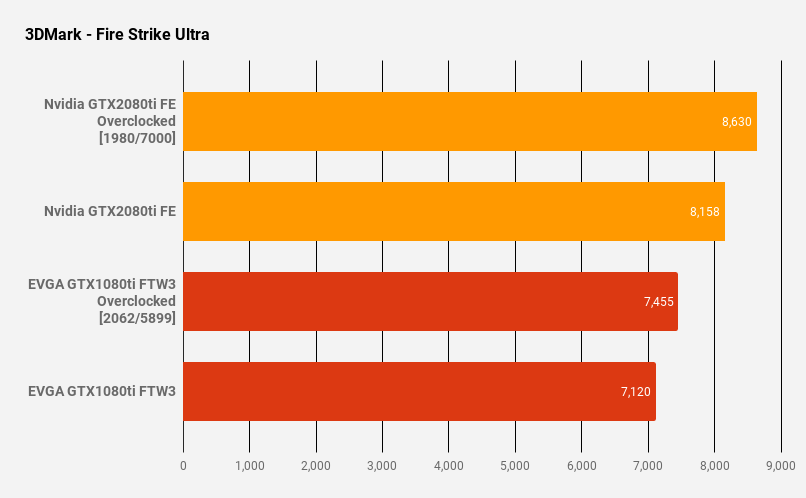

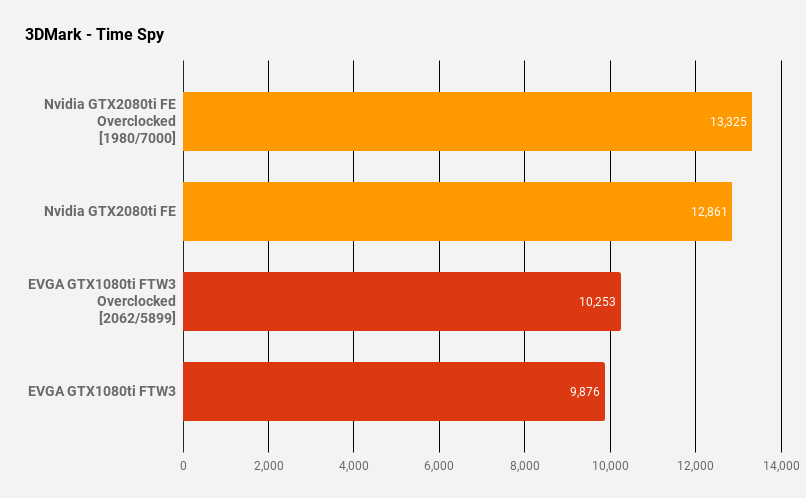

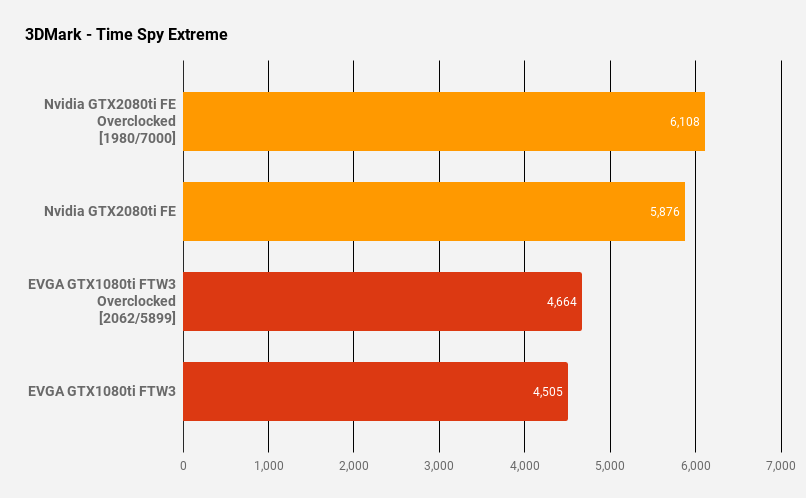

Synthetic benchmarks tested:

3DMark Suite

– (DX11) Fire Strike

– (DX11) Fire Strike Ultra

– (DX12) Time Spy

– (DX12) Time Spy Extreme

(DX11) Unigine Superposition

Games tested:

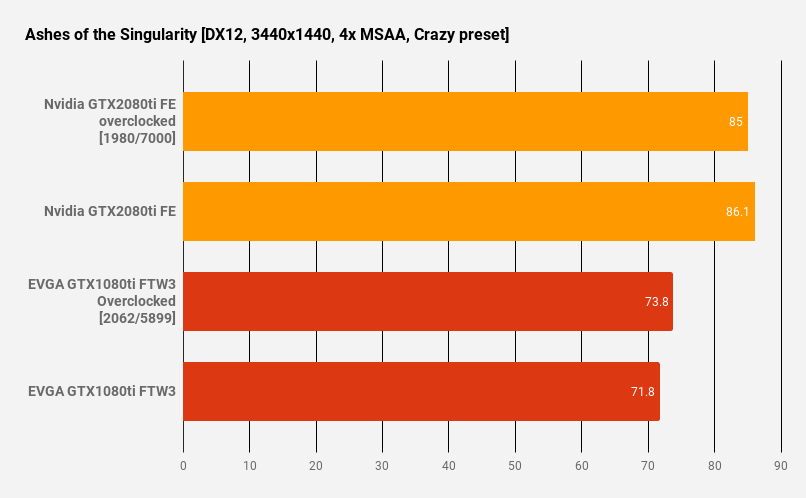

(DX12) Ashes of the Singularity

(DX12) Assetto Corsa Competizione

(DX11) Dirt Rally

(DX12) Forza Horizon 4

(DX12) Hitman (2016)

(DX12) Kingdom Come Deliverance

(DX12) Rise of the Tomb Raider

Graphics Cards tested:

EVGA GTX1080 Ti FTW3

EVGA GTX1080 Ti FTW3 overclocked to 2062/5899 (mild)

NVIDIA RTX2080 Ti FE

NVIDIA RTX2080 Ti FE overclocked to 1980/7000 (mild)

Resolutions tested:

3440 x 1440 21:9

Testing Methodology

For our tests, only the average framerates at a resolution of 3440×1440 were collected from each title.

Where possible, we used FRAPS’ benchmarking mode to capture 60 seconds of footage and then used FRAFS to collect the data.
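As a rough illustration of how an average framerate falls out of a frametime capture, the sketch below assumes a list of cumulative frame timestamps in milliseconds. The function name and the sample data are hypothetical, and this is not the actual code of either tool.

```python
def average_fps(timestamps_ms: list[float]) -> float:
    """Average FPS over a capture, from cumulative frame timestamps in ms."""
    if len(timestamps_ms) < 2:
        raise ValueError("need at least two frame timestamps")
    elapsed_s = (timestamps_ms[-1] - timestamps_ms[0]) / 1000.0
    if elapsed_s <= 0:
        raise ValueError("timestamps must be increasing")
    # N timestamps bound N - 1 frame intervals.
    return (len(timestamps_ms) - 1) / elapsed_s


# Hypothetical capture: one frame every 10 ms for one second.
sample = [float(t) for t in range(0, 1001, 10)]
print(average_fps(sample))  # 100.0
```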

Why 3440×1440 resolution?

3440×1440 @ 120Hz is the sweet spot between 1080p @ 144 and 4k @ 60.

To help showcase the kind of performance SFFn members can expect, we used M.2 SSDs. As a result, the bus interface is PCIe 3.0 x8 and not x16.

I’ll talk more in-depth about this after the results.

To reiterate, the GPU overclocks we used are mild. Every single GPU should be capable of attaining these clocks. Greater performance can be obtained by overclocking the memory but the gains may vary from one card to the next.

Game Benchmarks

The GeForce RTX 2080 Ti is 19.90% faster than the 1080ti FTW3 in Ashes of the Singularity.

It’s 16.66% faster than the overclocked 1080ti FTW3.

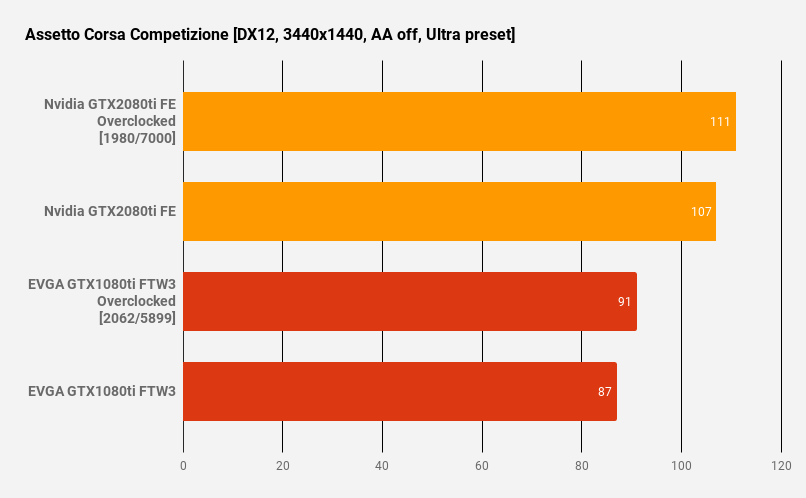

The GeForce RTX 2080 Ti is 22.90% faster than the 1080ti FTW3 in Assetto Corsa Competizione

It’s 17.58% faster than the overclocked 1080ti FTW3

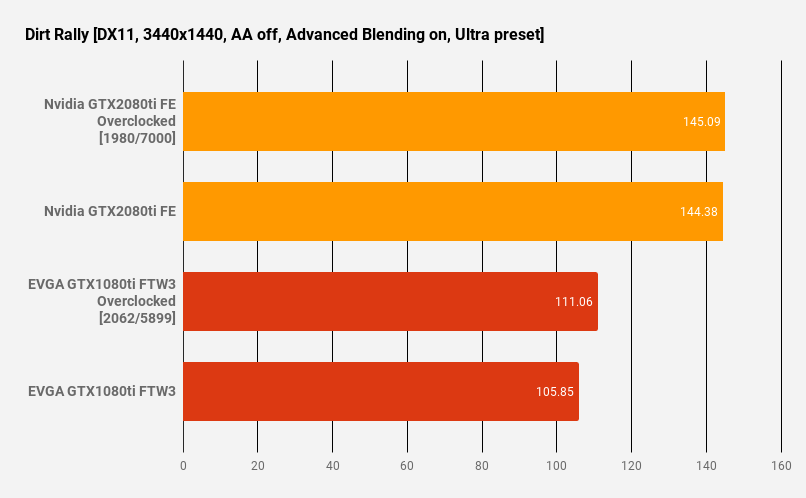

The GeForce RTX 2080 Ti is 36.40% faster than the 1080ti FTW3 in Dirt Rally

It’s 30.00% faster than the overclocked 1080ti FTW3

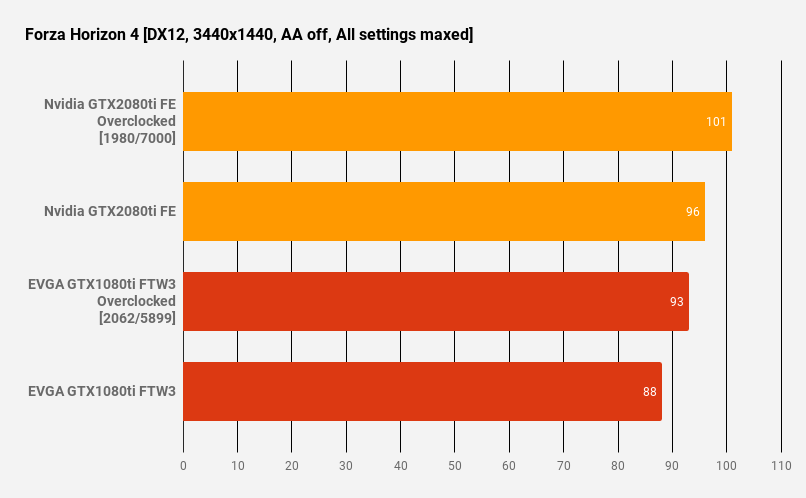

The GeForce RTX 2080 Ti is 9.09% faster than the 1080ti FTW3 in Forza Horizon 4

It’s 3.2% faster than the overclocked 1080ti FTW3

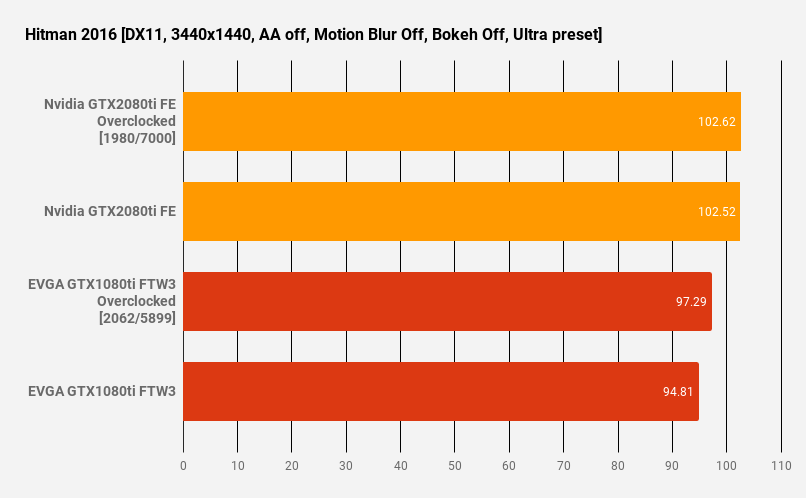

The GeForce RTX 2080 Ti is 8.10% faster than the 1080ti FTW3 in Hitman [2016]

It’s 5.37% faster than the overclocked 1080ti FTW3

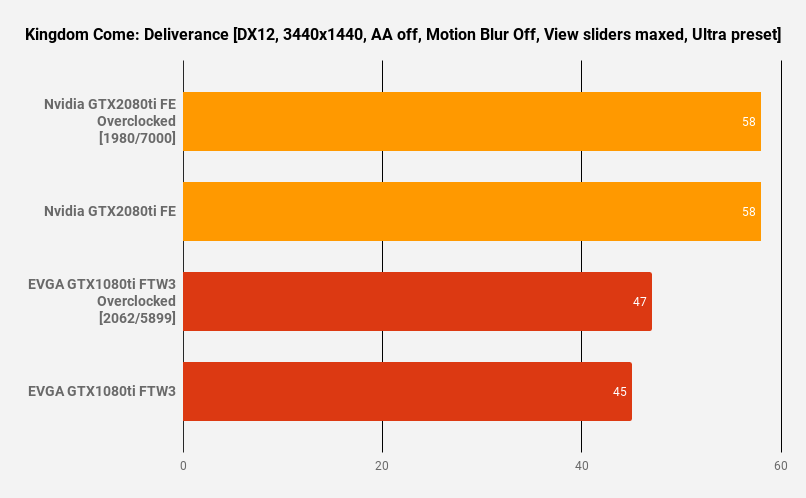

The GeForce RTX 2080 Ti is 28.80% faster than the 1080ti FTW3 in Kingdom Come Deliverance.

It’s 23.40% faster than the overclocked 1080ti FTW3

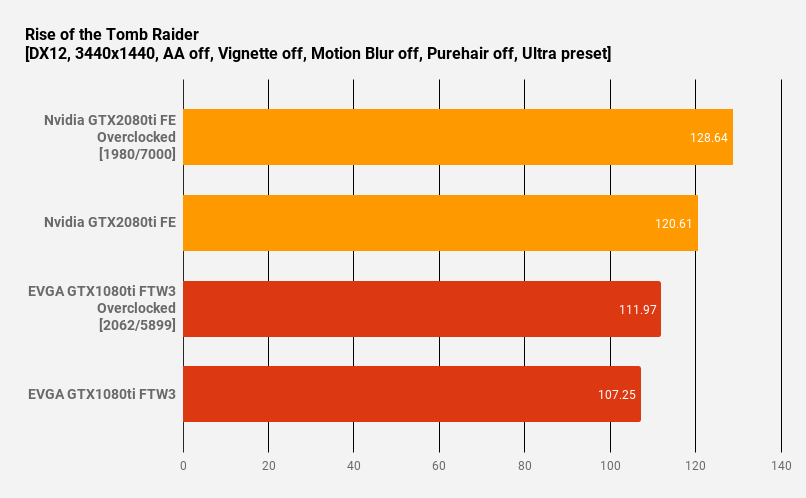

The GeForce RTX 2080 Ti is 12.45% faster than the 1080ti FTW3 in Rise of the Tomb Raider

It’s 7.71% faster than the overclocked 1080ti FTW3
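For anyone who wants to compare their own cards the same way, the “X% faster” figures are a plain relative difference between two average framerates. A minimal sketch, where the function name and the example framerates are hypothetical and not taken from the charts:

```python
def percent_faster(new_fps: float, old_fps: float) -> float:
    """How much faster new_fps is than old_fps, as a percentage."""
    if old_fps <= 0:
        raise ValueError("old_fps must be positive")
    return (new_fps - old_fps) / old_fps * 100.0


# Hypothetical averages, for illustration only.
print(round(percent_faster(120.0, 100.0), 2))  # 20.0
```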

DLSS game results

Coming soon!

RTX game results

Coming soon!

Synthetic Benchmarks

Synthetic benchmarks are an excellent way to utilize the full strength of a graphics card. These tools help to showcase how powerful a GPU can really be, but are not an accurate representation of the kind of performance you can expect in-game.

The GeForce RTX 2080 Ti is 12.47% faster than the 1080ti FTW3 in Fire Strike

It’s 6.71% faster than the overclocked 1080ti FTW3

The GeForce RTX 2080 Ti is 14.50% faster than the 1080ti FTW3 in Fire Strike Ultra

It’s 9.42% faster than the overclocked 1080ti FTW3

The GeForce RTX 2080 Ti is 30.11% faster than the 1080ti FTW3 in Time Spy

It’s 25.43% faster than the overclocked 1080ti FTW3

The GeForce RTX 2080 Ti is 30.40% faster than the 1080ti FTW3 in Time Spy Extreme

It’s 25.98% faster than the overclocked 1080ti FTW3

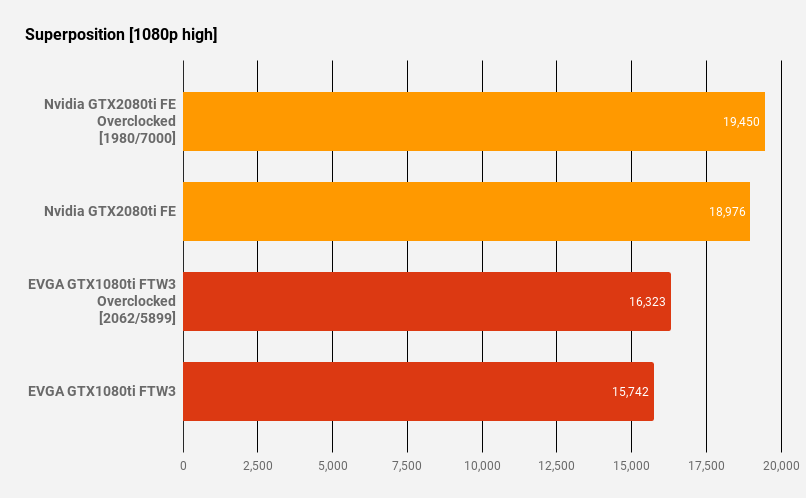

The GeForce RTX 2080 Ti is 20.54% faster than the 1080ti FTW3 in Superposition HIGH

It’s 16.25% faster than the overclocked 1080ti FTW3

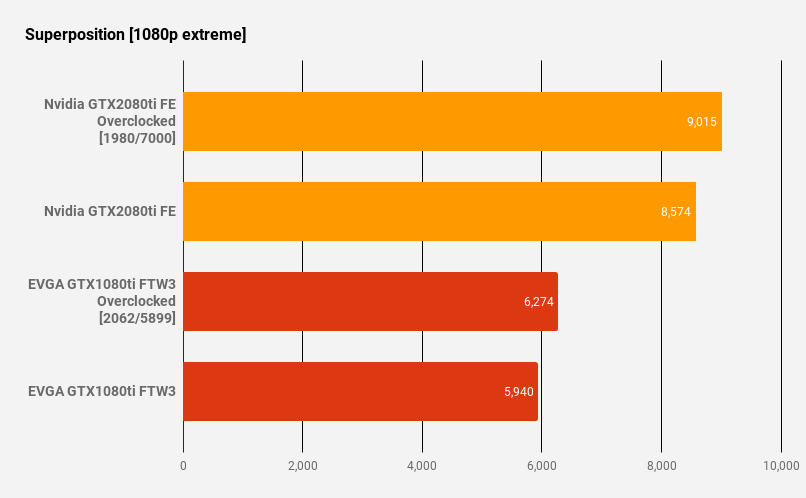

The GeForce RTX 2080 Ti is 44.34% faster than the 1080ti FTW3 in Superposition EXTREME

It’s 36.65% faster than the overclocked 1080ti FTW3

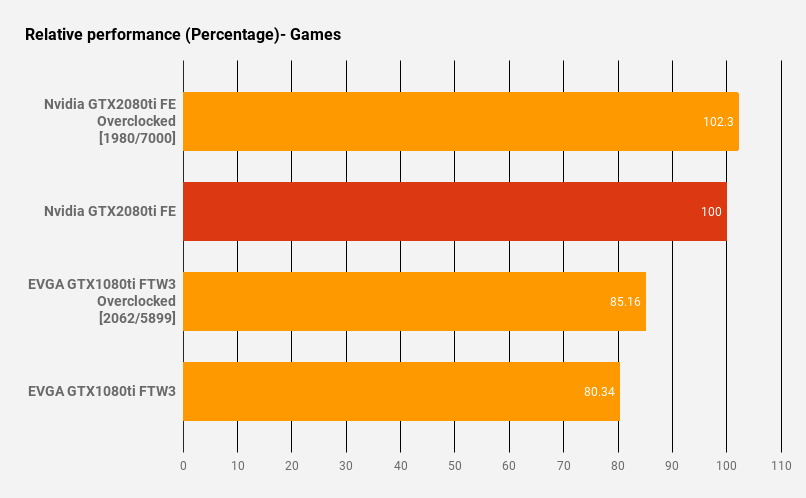

Benchmark Summary

In game benchmarks, the GeForce RTX 2080 Ti on average was 19.66% faster than the 1080ti FTW3.

That leap grew to 22.30% when Overclocked.

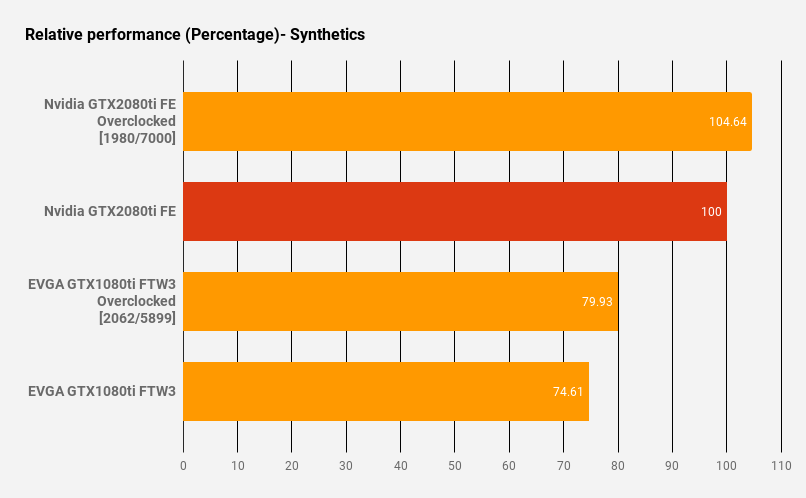

In synthetic benchmarks, the GeForce RTX 2080 Ti on average was 25.39% faster than the 1080ti FTW3.

That leap grew to 30.03% when Overclocked.
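The stock averages above are the arithmetic mean of the per-title percentages listed earlier in the review, which is easy to check. The overclocked averages are not reproduced here, since the per-title numbers behind them only appear in the charts.

```python
from statistics import mean

# Stock RTX 2080 Ti vs. stock 1080 Ti FTW3, percentages as listed in the review.
game_gains = [19.90, 22.90, 36.40, 9.09, 8.10, 28.80, 12.45]
synthetic_gains = [12.47, 14.50, 30.11, 30.40, 20.54, 44.34]

print(round(mean(game_gains), 2))       # 19.66
print(round(mean(synthetic_gains), 2))  # 25.39
```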

Noise Levels

Coming soon!

Thermals

Coming soon!

Power Consumption

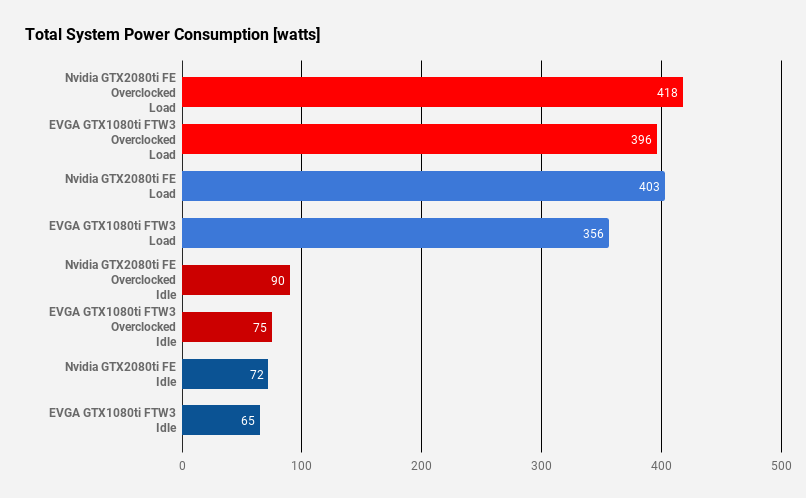

Power consumption was measured by plugging the test system into an otherwise unoccupied CyberPower CP1500PFCLCD PFC Sinewave UPS (1500VA/900W). Accurate to within a single watt and able to display values in real-time, it’s the most accurate tool that I have for this job.

This graph illustrates the peak power consumption when gaming.

With the CPU and GPU set to 100% load, the NVIDIA GeForce RTX 2080 Ti setup consumed 494 watts.

Oddly enough, under the same load, the EVGA GeForce GTX 1080 Ti FTW3 setup consumed 518 watts, more than the 2080 Ti setup.

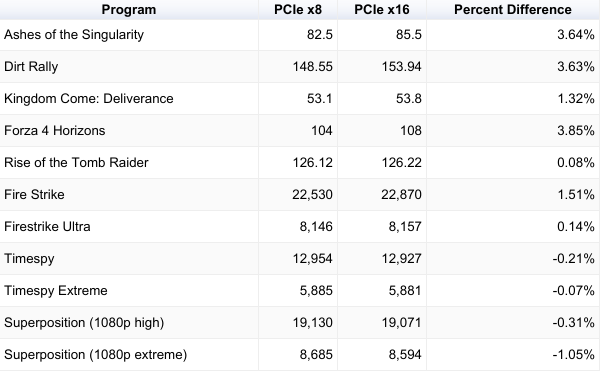

PCI-Express Scaling (x8 vs x16)

I wanted to be sure to include this in the review because I feel that PCI-Express Scaling is a really important detail to note for the SFF community.

Due to how compact our builds are, many members of our community avoid 2.5″ SSDs because of the clutter they create and the space they consume. Instead, most prefer M.2 SSDs, which need no cables and no drive bay. As a result, some users might be running their only PCIe 3.0 x16 slot at x8 (you can confirm this using GPU-Z and looking at the Bus Interface). This causes no measurable impact on performance for previous-generation cards like the GeForce GTX 1080 Ti, but with the GeForce RTX 2080 Ti there are some small but measurable losses.

I want to mention a terrific article W1zzard posted on PCI-Express scaling using the 2080 Ti that can be found here.

My initial goal was to beat him to the punch, as their previous PCI-Express scaling article came out in December of 2017. However, with the increased bandwidth of the GeForce RTX 2080 Ti, I guess they saw fit to capture and report their findings early on, and the results are conclusive: the 2080 Ti is the first GPU that can consume all the bandwidth of a PCIe 3.0 x16 slot.

The performance differences between PCIe bandwidth configurations are more pronounced at lower resolutions than 4K, which isn’t new. We saw this in every previous PCI-Express scaling test. The underlying reason is that the framerate is the primary driver of PCIe bandwidth, not the resolution.

My results, with 2x M.2 drives installed (running at x8 for the results above), mirrored W1zzard’s findings with roughly a 2-3% drop in performance at 3440×1440 resolution (with some results within the margin of error). To add clarity to this issue, the 2nd M.2 slot on the ASUS ROG STRIX X470-I GAMING shares lanes with the PCIe x16 slot, so by using it for additional storage capacity I’ve dropped the slot down to x8. As soon as that 2nd M.2 drive is removed, the PCIe x16 slot goes right back up to x16 speed.
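To put x8 versus x16 in numbers, here is a small sketch of the theoretical PCIe 3.0 link bandwidth. It uses the published PCIe 3.0 figures of 8 GT/s per lane with 128b/130b encoding. The function name is my own, and real-world throughput is lower once protocol overhead is included.

```python
def pcie3_bandwidth_gb_s(lanes: int) -> float:
    """Theoretical one-direction PCIe 3.0 bandwidth in GB/s for a lane count."""
    transfers_per_s = 8e9            # 8 GT/s per lane
    encoding_efficiency = 128 / 130  # 128b/130b line encoding
    bits_per_byte = 8
    return lanes * transfers_per_s * encoding_efficiency / bits_per_byte / 1e9


for lanes in (8, 16):
    print(f"x{lanes}: {pcie3_bandwidth_gb_s(lanes):.2f} GB/s")
# x8: 7.88 GB/s
# x16: 15.75 GB/s
```

Dropping to x8 halves the available link bandwidth, yet the measured loss was only 2-3%, which suggests the card rarely needs the full link at this resolution.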

At this time, we’re not sure how many other boards might be electrically similar, so an earlier version of this section has been altered, and parts of it removed outright, to improve accuracy.

We apologize for any false information. I could have researched the issue further, but I think it would warrant an entire article by itself.

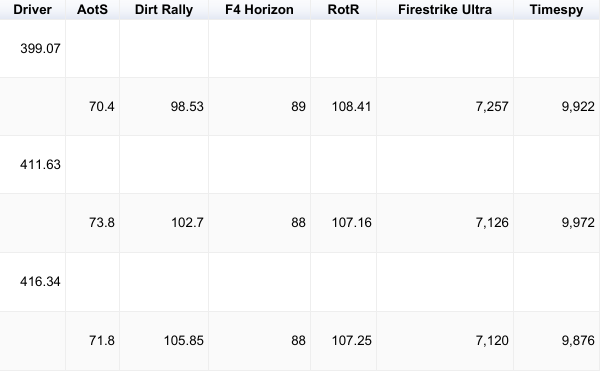

Driver Nerfing for Pascal GPUs

There were a lot of rumors swirling around that NVIDIA was releasing drivers that intentionally nerfed the performance of its previous architectures to make the 2080 Ti look more desirable. To confirm or refute these rumors, I took it upon myself to run a few benchmarks with each driver release using the GTX 1080 Ti. In the end, the rumors proved false as the results were well within the margin of error.

The Price

This topic deserved its very own section as it’s ultimately the main complaint against this entire series -mine included.

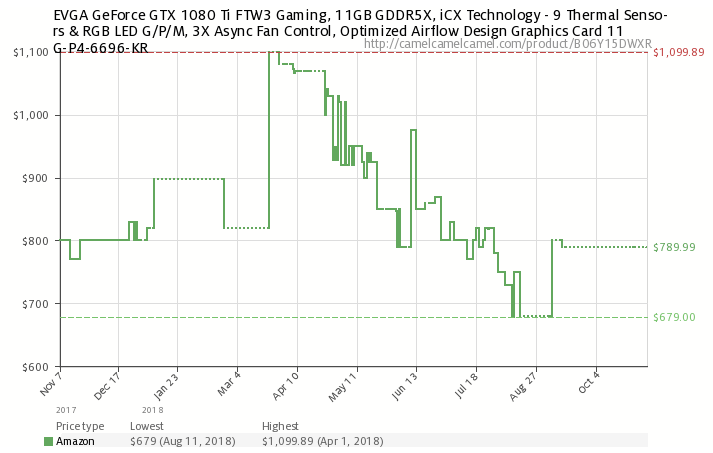

I want to start this section by saying that the price I paid for the GeForce RTX 2080 Ti was a whopping 55.74% more expensive than the EVGA GTX 1080 Ti FTW3 that I purchased on August 14th of 2017 (both cards include tax + shipping). That’s a tremendous jump in price when you consider that the GeForce RTX 2080 Ti was only 19.66% faster in our gaming benchmarks (25.39% in the synthetic ones). However, it would be remiss of me to exclude all the factors that led up to this price point, but before I continue, just know that I will not be defending NVIDIA for this abhorrent price point.
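One way to frame that gap is cost per unit of performance. The sketch below is my own framing, not an industry metric: it divides the relative price by the relative performance, using the 55.74% price difference together with the 19.66% gaming and 25.39% synthetic averages from the Benchmark Summary.

```python
def relative_cost_per_performance(price_increase_pct: float,
                                  perf_increase_pct: float) -> float:
    """Ratio of new to old cost per unit of performance (1.0 = same value)."""
    return (1 + price_increase_pct / 100) / (1 + perf_increase_pct / 100)


for label, perf_gain in (("games", 19.66), ("synthetics", 25.39)):
    ratio = relative_cost_per_performance(55.74, perf_gain)
    print(f"{label}: {(ratio - 1) * 100:.1f}% more money per unit of performance")
```

By this measure I paid roughly 30% more per frame in games than I did with the 1080 Ti FTW3.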

To help understand today’s market, let’s go as far back as the early 2000s, when flagship GPUs like ATI’s 9800 XT (2003) and NVIDIA’s 6800 Ultra (2004) were sold for $499. Adjusted for inflation, that’s $680.02 and $667.47 respectively and, as a result, this is the price point that we have come to expect for a flagship GPU.

Now, let’s step forward many generations to the GeForce 900 series -MAXWELL (before Turing, Volta, and Pascal)

September 18, 2014 – GTX 980 – $549

March 17, 2015 – GeForce GTX TITAN X – $999

June 2, 2015 – GTX 980 Ti – $649

MAXWELL was then replaced by the GeForce 10 series -PASCAL

May 27, 2016 – GTX 1080 – $599 ($699 FE)

August 2, 2016 – NVIDIA TITAN X – $1200

March 10, 2017 – GTX 1080 Ti – $699 ($699 FE)

By now you should be able to see, and perhaps even remember, NVIDIA’s launch trend. Each overpriced TITAN X release was followed shortly after by a far more affordable consumer GPU that performed nearly identically to it in benchmarks, effectively killing the sales of TITAN cards in each generation. There is absolutely no way the marketing and sales team at NVIDIA didn’t see this coming, so it was most likely a planned strategy that helped consumers justify the price point of each new Ti-series GPU. The mistakes that happened between those generations might explain why the 2080 Ti’s launch price is so high.

When the 10 series was launched, NVIDIA was left with no choice but to slash the prices of the 900 series by nearly 20% (source) in order to help clear inventory. However, many articles were quick to stop recommending the GTX 980 Ti as the newer GTX 1070 (released June 10th, 2016) was priced well below it, yet outperformed even the TITAN X (Maxwell). If that wasn’t bad enough, the more affordably priced GTX 980 was taken out back when AMD launched their $200 RX 480 that matched it frame for frame in performance (and bested it after further driver improvements). I cannot imagine that NVIDIA was happy to see an entire line of old stock no longer moving. I wouldn’t be surprised if this is when NVIDIA decided to change their generational launch strategy moving forward.

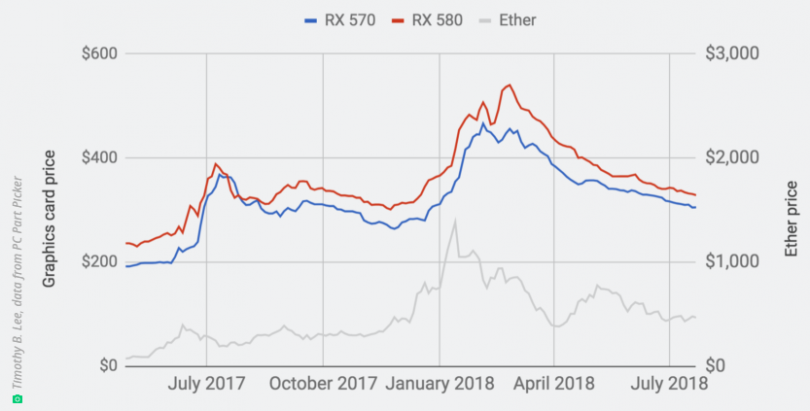

Fast-forward to mid-2017 when Ethereum happened. Affordable GPUs like AMD’s newly released RX 570/580 more than doubled in price by the start of 2018. The GTX 1080 Ti jumped to $900 by New Year’s and up to nearly $1100 in March. It was a dark time for system builders.

In such a horribly inflated market, NVIDIA surprise launched a new GPU architecture and flagship card -VOLTA.

December 7, 2017 – TITAN V – $3000

Out of reach to most, this new architecture was a glimpse of Turing’s capability, offering roughly a 15%-18% advantage over the 1080 Ti (at ~3x the price). Then in late September of 2018, a few months after the Ethereum crash, when GPU prices were just starting to normalize, NVIDIA officially launched this 2000 series -TURING. NVIDIA was not willing to make the same generation-jump mistake they made before with the 900 and 10 series, and had absolutely no reason to, so the current landscape of consumer GPUs only helped NVIDIA to determine their target price. With the launch of Turing, NVIDIA’s aging GTX 1080 Ti still had no competition from AMD and I would imagine that NVIDIA was content watching their previous generation of cards sell at the same MSRP set in 2016. Since Turing is capable of offering greater performance than the TITAN V (now 1 architecture generation behind), as long as they set its price to anything more than the $699 1080 Ti and less than the $3000 TITAN V, it would surely have no problem selling AND wouldn’t cannibalize the sales of the previous generation.

A few articles hinted at it, but by setting the GeForce RTX 2080 Ti’s price to what the TITAN-series previously occupied, NVIDIA only helped to promote the sales of their remaining stock by making that $699 price tag seem that much more appealing.

Anyway, that’s a very long-winded explanation of their pricing scheme, but I personally think it’s inexcusable as something closer to ~$899 would have been abusive enough. Sure, it has that newfangled GDDR6 memory, the RT cores, and those Tensor Cores, but without any modern titles or even a tech demo that can be used to showcase this massive architectural accomplishment, I’m left wondering what half of this technology I paid for can actually do.

Worse yet, if 12nm is replaced by 7nm(Ampere) by the start of 2020, this generation may never truly get a chance to shine.

Technology

We have a few topics to discuss here and I will keep them brief: DLSS, Ray tracing, and VirtualLink. At present, the card supports them all but nothing is available to utilize them.

DLSS is being advertised as a way to make supported games run approximately 40% faster.

How? From what I understand, DLSS appears to be rendering the scene at a lower resolution, upscaling it, and using AI to make the picture closely match the resolution that it’s set to emulate.

In most cases, the quality difference won’t be noticeable, but the supporting title will run at higher framerates than it would at that higher resolution.

In theory, it sounds very compelling -an absolute dream for improved performance in 4k/VR, but there isn’t much information to go off of. Nobody quite knows how this technology works so we’re left scratching our heads and guessing at this point.

I covered Ray tracing at the top of the article already but there aren’t enough words to illustrate how crucial this technology is to the future of games -Ray tracing is truly a game changer. While NVIDIA is the first to officially market its capabilities, DX12 and Vulkan do support it, and you can bet that Ray tracing is going to be all the rage over the next few years. If the extreme price of this card wasn’t such an issue, and this technology was readily available in a plethora of titles, the graphics alone would sell the card, like Crysis and Half-Life 2 sold hardware back in the day.

VirtualLink is the lightweight high bandwidth input set to replace all bulky cables in today’s VR headsets -no more HDMI. Every big player who has a stake in the future of VR has agreed to use this single open standard and that includes AMD, NVIDIA, Valve, Microsoft, Vive, and Oculus. This VirtualLink standard makes it easier to support a wider array of devices such as Laptops and Tablets, allowing the next-gen VR headsets to be cross-compatible. Capable of carrying Audio, Video, USB 3.1 Gen2, and passing through up to 27 watts of power, this single thin USB C port will greatly simplify the next-generation VR experience.

At this point, you might be asking, “Why not go wireless?” The answer is simple: no consumer-grade wireless solution exists that is safe to mount on your head and uses little power to transmit that next level of information. Intel’s WiGig, used in the wireless accessory for the Vive and Vive Pro (which also requires an available PCIe slot -gross!), is only capable of 7 Gbps (theoretical) while VirtualLink is capable of 32.4 Gbps. As they push the resolution, the refresh rate, the color depth, and the response rate of movement, more bandwidth will be needed. VirtualLink provides more than enough bandwidth to allow 4K-resolution video at 120Hz with 8 bits per color -ideal for next-gen VR.
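The bandwidth claim can be sanity-checked with some quick arithmetic. This sketch counts only active pixels (no blanking intervals) and assumes the 32.4 Gbps figure is a raw DisplayPort HBR3-style rate with 8b/10b encoding, so treat the output as a ballpark rather than a spec-accurate number. The function name is my own.

```python
def uncompressed_video_gbps(width: int, height: int, refresh_hz: int,
                            bits_per_channel: int, channels: int = 3) -> float:
    """Uncompressed video data rate in Gbps, active pixels only (no blanking)."""
    return width * height * refresh_hz * bits_per_channel * channels / 1e9


needed = uncompressed_video_gbps(3840, 2160, 120, 8)  # 4K, 120Hz, 8 bits per color
virtuallink_raw = 32.4                                # Gbps, from the review
virtuallink_payload = virtuallink_raw * 8 / 10        # assumption: 8b/10b encoding
wigig = 7.0                                           # Gbps (theoretical), from the review

print(f"4K120 8-bit needs about {needed:.2f} Gbps")
print(f"VirtualLink payload: about {virtuallink_payload:.2f} Gbps")
print(f"Fits VirtualLink: {needed <= virtuallink_payload}")
print(f"Fits WiGig uncompressed: {needed <= wigig}")
```

Under those assumptions, 4K at 120Hz needs about 23.9 Gbps, which fits within VirtualLink but is more than three times what WiGig can carry without compression.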

Conclusion

Price aside, the GeForce RTX 2080 Ti is definitely a terrific performer in today’s titles; there is no denying that. However, we’re nearly two months past its launch and still left wondering what the card is actually capable of. We’ve seen a potentially higher rate of failure with this generation (source), three RTX capable titles have been released but don’t currently have Ray tracing implemented, and future DLSS/RT support for Final Fantasy XV has been cancelled altogether (source). So far, the tech it’s supposed to bring to market is off to a rough start.

We will update this review regularly but the best conclusion I can offer is that if you want the best, this is it.

Pros

I define “Pros” as things that I really liked about the GPU; things that made me sit back and really appreciate the quality or how unique the product is.

- It’s the fastest consumer-grade GPU available.

- No RGB – just green.

- The card’s aesthetic is terrific.

- The size of the FE is perfect for high-end SFF builds.

- Terrific fan choice.

- Excellent thermal performance.

- VirtualLink support for next-gen VR headsets.

- DEATH TO DVI!

Nitpicks

No review would be complete without a little bit of nitpicking and while a few of these are just that, some of them are more obvious and could have been completely avoided by the manufacturer.

- Overbuilt -do not try to disassemble.

- Included bundle is lacking: No accessories, software, discounts, game codes, poster, or case badge included.

- No RGB – just green.

- Power hungry.

- No tech demos available to showcase RTX technology (https://www.nvidia.com/coolstuff/demos).

Cons

I define “Cons” as unforgivable issues that could have a permanent impact on the usability of the product. These are issues that if removed, would make for a near perfect design.

- Price.

- Features unavailable at launch.

Please let us know what you think about this article on the forums.

Edited 11/13/2018 with accuracy and clarification from community member EdZ and |||