ALL PHOTO CREDIT - COOLER MASTER

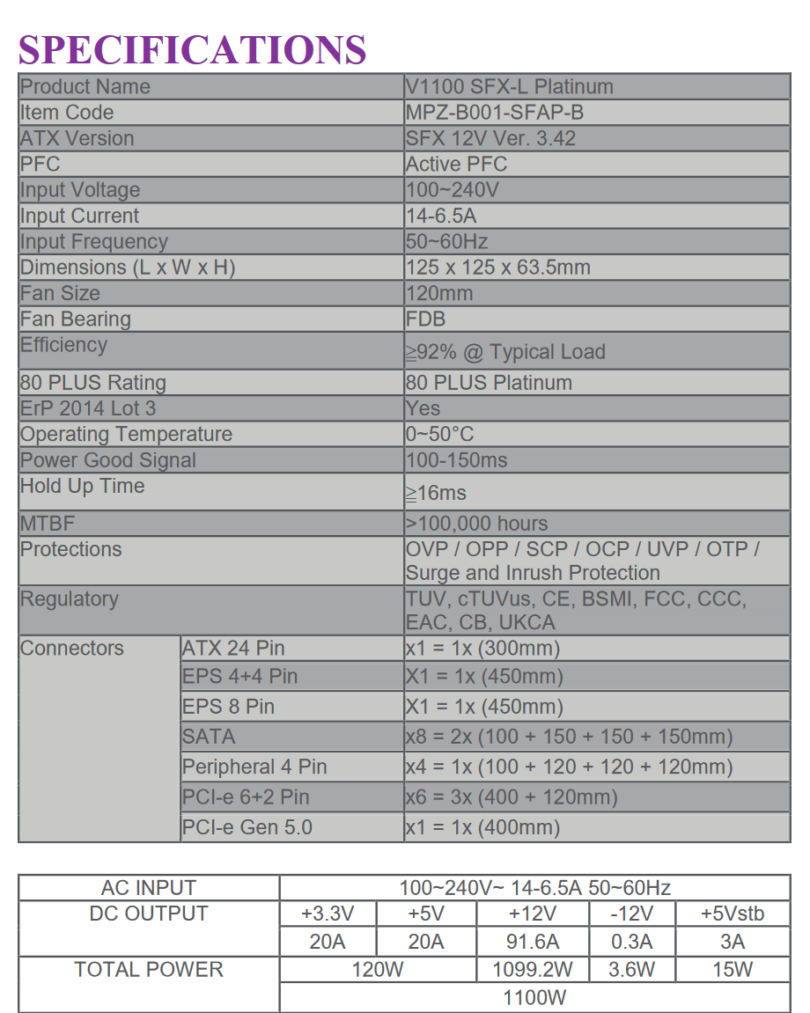

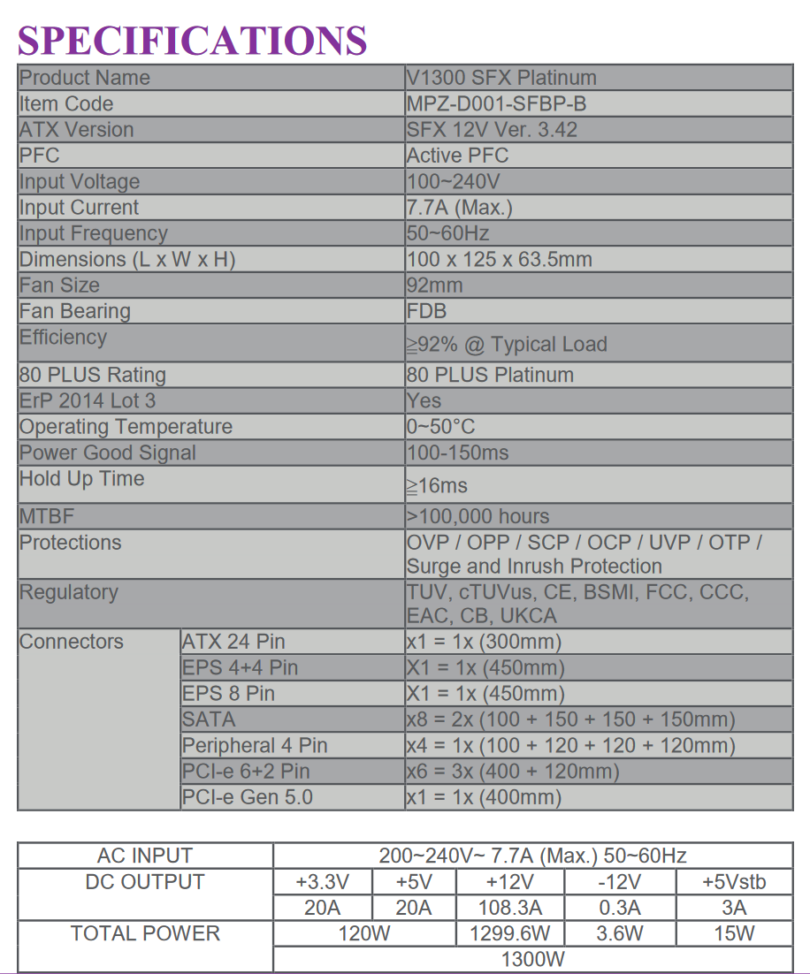

Not content with simply having the most powerful SFX PSUs, Cooler Master has shown the new SFX-L V series models. Like their smaller SFX brothers, the V1100 SFX-L Platinum and V1300 SFX-L Platinum are both 80 Plus Platinum certified, and are rated at 1100 and 1300 watts, respectively. Both also feature three PCI-E 6+2 connectors for six connections, and a PCI-E 5.0 connector. What separates them from the SFX units is a 120mm fluid dynamic bearing (FDB) fan in place of the smaller 92mm one. In theory, the larger fan should allow for quieter operation under load.

Currently, the most powerful SFX-L PSU is the SilverStone SX1000 Platinum, which we recently used to build our 12900K / RTX 3080 SFF machine. The SX1000 is dead quiet up to about 200 watts. It will be interesting to see whether Cooler Master can push that silence barrier further with its V series.

Here are the full specs for the PSUs:

V1100 SFX-L Platinum

V1300 SFX-L Platinum

You can check out the official website by clicking HERE. However, it contains some errors at the time of writing, so I recommend downloading the spec sheets located HERE instead.